Enhancing Asynchronous Transaction Monitoring: Implementing Distributed Tracing in Apache Camel Applications with OpenTelemetry

1 Introduction

In today’s dynamic landscape, Distributed Tracing has emerged as an indispensable practice. It helps to understand what is under the hood of distributed transactions, providing answers to pivotal questions: What comprises these diverse requests? What contextual information accompanies them? How extensive is their duration?

Since the introduction of Google’s Dapper, a plethora of tracing solutions has flooded the scene. Among them, OpenTelemetry has risen as the frontrunner. Other alternatives such as Elastic APM and DynaTrace are also available.

This toolkit seamlessly aligns with APIs and synchronous transactions, catering to a broad spectrum of scenarios.

However, what about asynchronous transactions? The necessity for clarity becomes even more pronounced in such cases. Particularly in architectures built upon messaging or event streaming brokers, attaining a holistic view of the entire transaction becomes arduous.

Why does this challenge arise? It’s a consequence of functional transactions fragmenting into two loosely coupled subprocesses:

Hopefully you can rope OpenTelemetry in it to shed light.

I will explain in this article how to set up and plug OpenTelementry to gather asynchronous transaction traces using Apache Camel and Artemis. The first part will use Jaeger and the second one, Tempo and Grafana to be more production ready.

All the code snippets are part of this project on GitHub. (Normally) you can use and run it locally on your desktop.

2 Jaeger

2.1 Architecture

The SPANs are broadcast and gathered through OpenTelemetry Collector. It finally sends them to Jaeger.

Here is the architecture of such a platform:

2.2 OpenTelemetry Collector

The cornerstone of this architecture is the collector. It can be compared to Elastic LogStash or an ETL. It will help us get, transform and export telemetry data.

For our use case, the configuration is quite simple.

First, here is the Docker Compose configuration:

| |

and the otel-collector-config.yaml:

| |

Short explanation

If you want further information about this configuration, you can browse the documentation.

For those who are impatient, here are a short explanation of this configuration file:

- Where to pull data?

- Where to store data?

- What to do with it?

- What are the workloads to activate?

2.3 What about the code?

The configuration to apply is pretty simple and straightforward. To cut long story short, you need to include libraries, add some configuration lines and run your application with an agent which will be responsible for broadcasting the SPANs.

2.3.1 Libraries to add

For an Apache Camel based Java application, you need to add this starter first:

| |

In case you set up a basic Spring Boot application, you only have to configure the agent (see below).

2.3.2 What about the code?

This step is not mandatory. However, if you are eager to get more details in your Jaeger dashboard, it is advised.

In the application class, you only have to put the @CamelOpenTelemetry annotation.

| |

If you want more details, you can check the official documentation.

2.3.3 The Java Agent

The java agent is responsible for instrumenting Java 8+ code, capturing metrics and forwarding them to the collector.

In case you don’t know what is a Java Agent, I recommend watching this conference.

Its documentation is available on GitHub. The detailed list of configuration parameters is available here. You can configure it through environment, system variables or a configuration file.

For instance, by default, the OpenTelemetry Collector default endpoint value is http://localhost:4317.

You can alter it by setting the OTEL_EXPORTER_OTLP_METRICS_ENDPOINT environment variable or the otel.exporter.otlp.metrics.endpoint java system variable (e.g., using -Dotel.exporter.otlp.metrics.endpoint option ).

In my example, we use Maven configuration to download the agent JAR file and run our application with it as an agent.

Example of configuration

| |

The variables in comment (e.g., otel.traces.sampler) can be turned on if you want to sample your forwarded data based on a head rate limiting.

Before running the whole application (gateway, producer,consumer), you must ramp up the infrastructure with Docker compose. The source is available here.

| |

You can now start both the producer and the consumer:

| |

| |

The gateway can also be turned on and instrumented in the same way. You can run it as:

| |

2.3.4 How is made the glue between the two applications?

The correlation is simply done using headers. For instance, in the consumer application, when we consume the messages as:

| |

I logged on purpose the traceparent header.

| |

It allows to Jaeger to correlate our two transactions.

For your information, here are all the headers available while consuming the message

| |

2.4 Dashboard

To get traces, I ran this dumb command to inject traces into Jaeger:

| |

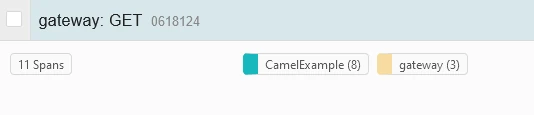

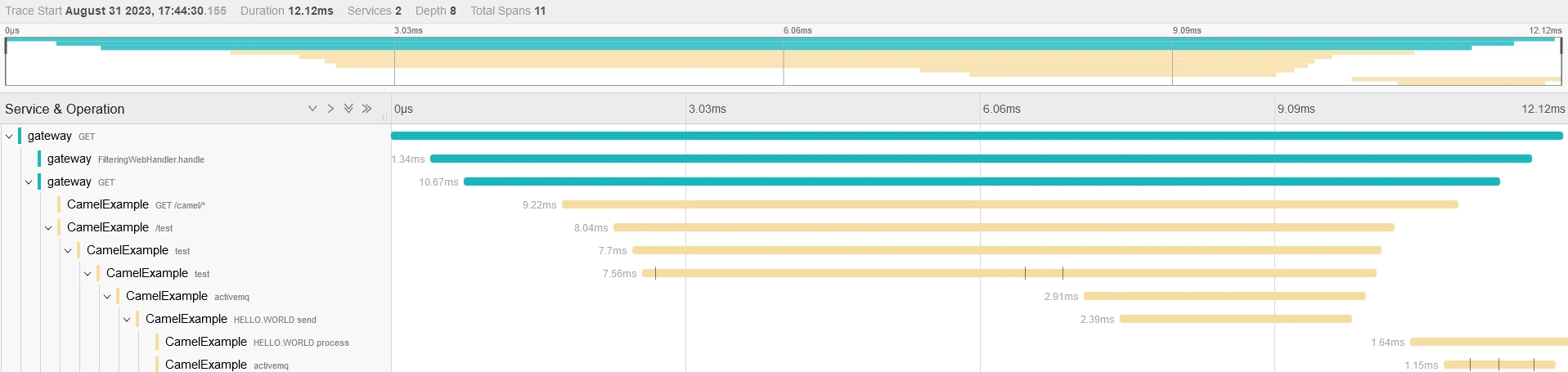

Now, you can browse Jaeger (http://localhost:16686) and query it to find trace insights:

If you dig into one transaction, you will see the whole transaction:

And now, you can correlate two sub transactions:

3 Tempo & Grafana

This solution is pretty similar to the previous one. Instead of pushing all the data to Jaeger, we will use Tempo to store data and Grafana to render them. We don’t need to modify the configuration made in the existing Java applications.

3.1 Architecture

As mentioned above, the architecture is quite the same. Now, we have the collector which broadcast data to Tempo. We will then configure Grafana to query to it to get traces.

3.2 Collector configuration

The modification of the Collector is easy (for this example). We only have to specify the tempo URL.

| |

3.3 Tempo configuration

I used here the standard configuration provided in the documentation:

| |

3.4 Grafana configuration

Now we must configure Grafana to enable querying into our tempo instance. The configuration is made here using a configuration file provided during the startup

The datasource file:

| |

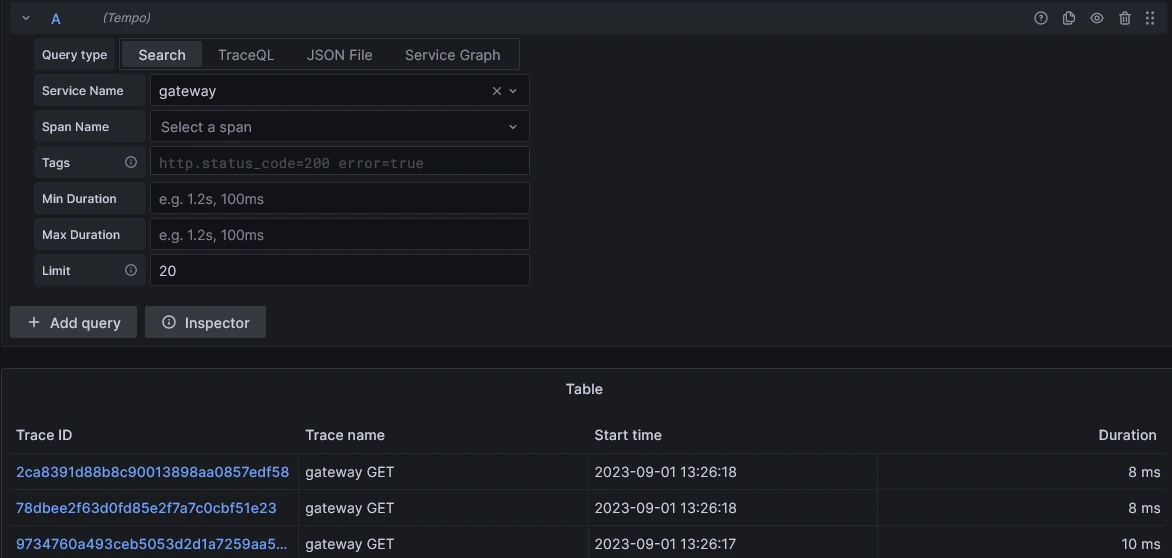

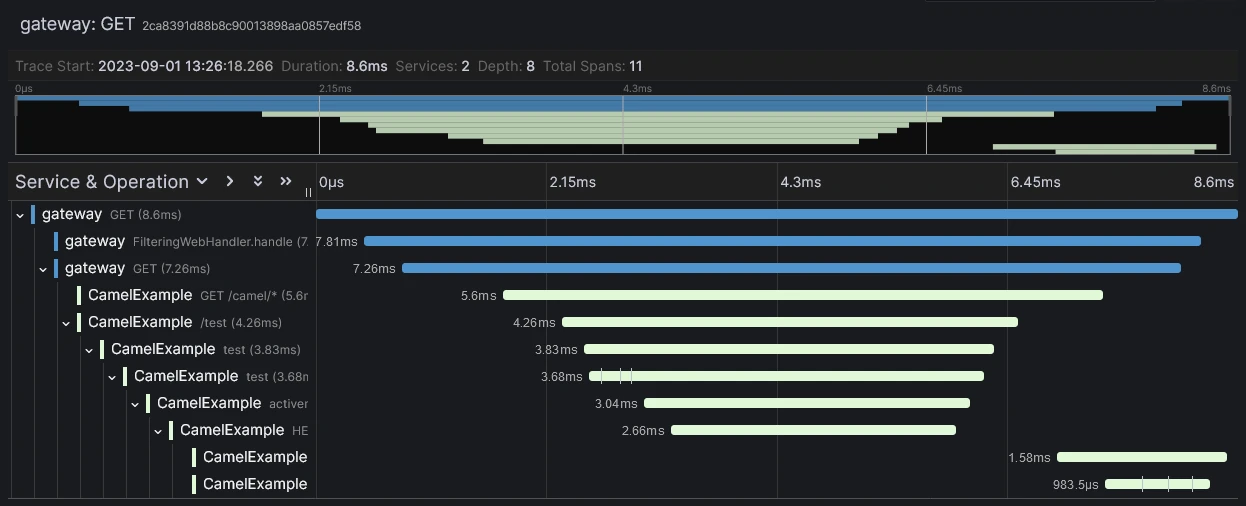

3.5 Dashboard

As we have done before, we must start the infrastructure using Docker Compose:

| |

Then, using the same rocket scientist maven commands, we can run the same commands and browse now Grafana (http://localhost:3000) to see our traces:

4 Conclusion

We saw how to highlight asynchronous transactions and correlate them through OpenTelemetry and Jaeger or using Tempo & Grafana. It was voluntarily simple.

If you want to dig into OpenTelemetry Collector configuration, you can read this article from Antik ANAND (Thanks to Nicolas FRANKËL for sharing it) and the official documentation. A noteworthy aspect of OpenTelemetry lies in its evolution into an industry-standard over time. For instance,Elastic APM is compatible with it.

I then exposed how to enable this feature on Apache Camel applications. It can be easily reproduced with several stacks.

Last but not least, which solution is the best?

I have not made any benchmark of Distributed Tracing solutions. However, for a real life production setup, I would dive into Grafana and Tempo and check their features. I am particularly interested in mixing logs, traces to orchestrate efficient alerting mechanisms.